Predibase

Founded Year

2021

Stage

Series A - II | Alive

Total Raised

$28.45M

Last Raised

$12.2M | 2 yrs ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+6 points in the past 30 days

About Predibase

Predibase specializes in fine-tuning and serving large language models (LLMs) within the artificial intelligence and machine learning industries. The company provides a platform for developers to efficiently train and deploy task-specific open-source LLMs using optimized techniques such as quantization, low-rank adaptation, and memory-efficient distributed training. Predibase's infrastructure supports scalable managed deployments both in private clouds and in its own serverless environment. It was founded in 2021 and is based in San Francisco, California.


ESPs containing Predibase

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The Small Language Models (SLMs) tools and development market focuses on the creation, optimization, and deployment of smaller-scale language models. These models, while less complex and resource-intensive than large language models (LLMs) like GPT-4, offer several advantages including cost-effectiveness, faster deployment times, and reduced computational requirements.

Predibase was named a Leader among 11 other companies, including Hugging Face, Sarvam AI, and Arcee.

Predibase's Products & Differentiators

Predibase Platform

Predibase is the fastest way to go from data to deployment on all of your machine learning initiatives. As the first platform to take a low-code declarative approach to machine learning, Predibase makes it easy for data teams of all skill levels to build, iterate and deploy state-of-the-art models with just a few lines of code. Built on the cloud and founded by the team behind popular open-source projects Ludwig and Horovod, Predibase is extensible and designed to scale for modern workloads. Our mission is simple: help data teams deliver more value faster with machine learning.


Research containing Predibase

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Predibase in 3 CB Insights research briefs, most recently on Jul 15, 2024.

Jul 2, 2024

How to buy AI: Assessing AI startups’ potential

May 24, 2024

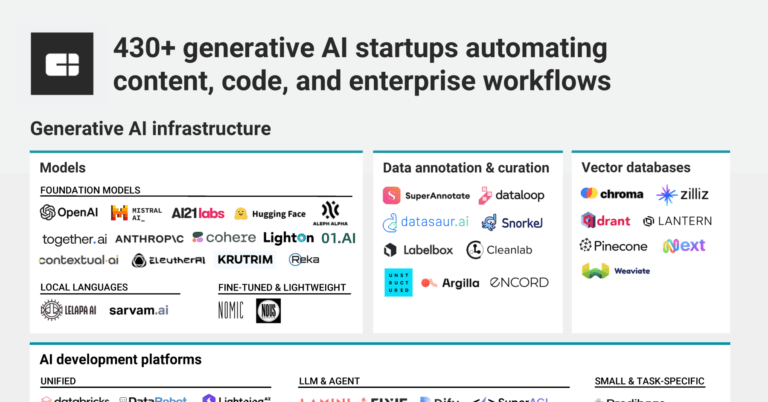

The generative AI market map

Expert Collections containing Predibase

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Predibase is included in 4 Expert Collections, including Hydrogen Energy Tech.

Hydrogen Energy Tech

3,196 items

Companies that are engaged in the production, utilization, or storage and distribution of hydrogen energy. This includes, but is not limited to, companies that manufacture hydrogen, those that convert hydrogen into usable energy, and those that store and distribute hydrogen.

Generative AI

1,298 items

Companies working on generative AI applications and infrastructure.

AI 100 (2024)

100 items

Artificial Intelligence

7,222 items

Latest Predibase News

Feb 6, 2025

The DeepSeek Moment

In the rapidly evolving world of AI, the arrival of DeepSeek-R1 marks a pivotal shift. We reached out to founders of five leading AI infrastructure companies in Greylock’s portfolio (Devvret Rishi of Predibase, Tuhin Srivastava of Baseten, Ankur Goyal of Braintrust, Jerry Liu of LlamaIndex, and Alex Ratner of Snorkel AI) to get their take on DeepSeek and what it means for the future of open-source vs. closed models, AI infrastructure, and GenAI economics. Their insights reveal broad enthusiasm about the advancements DeepSeek brings, with some divergence in perspectives on its real-world impact.

Open-Source vs. Closed Models: The Playing Field Has Leveled

Historically, proprietary models like OpenAI’s were significantly ahead of open-source alternatives, often by 6-12 months. DeepSeek-R1 has fundamentally closed that gap, demonstrating performance on par with OpenAI’s latest offerings on key reasoning benchmarks despite its smaller footprint.

According to Rishi, “DeepSeek-R1 is a watershed moment for open-source AI. Historically, open-source models trailed proprietary models like OpenAI’s by 6-12 months. Now, DeepSeek-R1 has essentially closed that gap and is on par with OpenAI’s latest models on key reasoning benchmarks despite its smaller footprint.” He believes this is the inflection point where open source begins to commoditize the model layer.

Srivastava echoes this sentiment, stating, “DeepSeek as a moment changes everything. An open-source model is at the same level of quality as the SOTA closed-source models, which previously was not the case. Now with the cat out of the bag, all of the open-source models like Llama, Qwen, and Mistral will be soon to follow.”

Goyal offers a more balanced perspective: “DeepSeek is the ‘LLaMa moment’ for O1-style (reasoning) models. In the very worst case, it means that the secret formula is out, and we’ll have a vibrant and competitive LLM market just like we did for GPT, Claude, and LLaMa-style models. In the best case, engineering teams will have a huge diversity of practical options, each with compute, cost, and performance trade-offs that enable them to solve a very wide variety of problems. In all cases, I think the world benefits from this progress.”

Ratner echoes the above sentiments about open source and model diversity, focusing on how massively this accelerates enterprises' efforts to specialize their own AI: “We’ve always viewed open source models as right behind; algorithms and model architectures rarely stay hidden for long. It was only a matter of time. However: it punctuates the exciting reality that enterprises will have a plethora of performant and cheap LLM options to then evaluate and specialize with their data and expertise, for their unique use cases. I expect DeepSeek to accelerate this trend of specialized enterprise AI massively.”

AI Infrastructure and Developer Adoption: The Reinforcement Learning Revolution

One of the most striking aspects of DeepSeek-R1 is its use of reinforcement learning (RL) to improve reasoning capabilities. While RL-based LLM optimization has been explored for years, DeepSeek is the first open-source model to successfully implement it at scale with measurable gains, using a technique called Group Relative Policy Optimization (GRPO).

Rishi sees this as a game-changer, explaining, “DeepSeek’s most impactful contribution is proving that pure reinforcement learning can bootstrap advanced reasoning capabilities, as seen with their R1-Zero model.” However, he also notes a major gap: “Most ML teams have never trained reasoning models, and today’s AI tooling isn’t built to support this new paradigm.”

Srivastava believes that DeepSeek marks an inflection point for AI infrastructure: “With DeepSeek, the moat of ‘we trained the biggest and best model, so we’re keeping it closed’ is gone. Now, you have a situation where frontier model-level quality is available in a model you completely control.”

Ratner adds, “We are seeing the ‘AlphaGo moment’ for constrained, verifiable domains (e.g. math, basic coding: things that are simple and well defined to check for correctness), showing the power of LLMs + RL, and the rapid diffusion of algorithmic advancements.” However, he stresses that the next step, expanding this success to more complex and less verifiable domains, will be a much longer and more challenging journey that requires far more human ingenuity and input.

New Applications: AI Reasoning at Scale

DeepSeek’s improved reasoning capabilities unlock a new wave of applications. Rishi highlights several emerging possibilities, including:

Autonomous AI agents that refine their decision-making over time.
Highly specialized planning systems in industries like finance, logistics, and healthcare.
Enterprise AI assistants that dynamically adapt to user needs beyond rigid RAG-based solutions.
AI-powered software engineering tools that can self-debug and optimize code based on performance feedback.

Liu expands on this by discussing the implications for GPU demand and agentic applications: “DeepSeek does not mean that there will be less demand for GPU compute; on the contrary, I’m quite excited because I think it will significantly accelerate the demand and adoption of agentic applications. One of the core issues with building any agent that ‘actually works’ is reliability, speed, and cost: robust agent applications that can e2e automate knowledge workflows require reasoning loops that repeatedly make LLM inference calls. The more general/capable the agent is, the more reasoning loops it requires. While the O1/O3 series of models have impressive reasoning capabilities, they are way too cost prohibitive to use for more general agents. Faster/cheaper models incentivize the development of more general agents that can solve more tasks, which in turn leads to greater demand and adoption across more people and teams.”

Srivastava emphasizes, “There are big implications from DeepSeek and the subsequent models that will follow for highly regulated industries. Companies that have strict data compliance requirements will be able to more freely experiment and innovate knowing that they can completely control how data is used and where it’s sent.”

Ratner underscores that data remains the real edge: “R1-style progress relies on strong pre/post-training in the relevant domains and rigorous evaluation data before the RL magic kicks in.” He further explains, “Many things in GenAI today effectively reduce to really high-quality, domain-specific labeling, including reinforcement learning. DeepSeek’s results reiterate the point that if you have a good way to label (i.e. a ‘reward function’), you can do magic; but in most domains, it’s not so easy to get the data or label it.”

Economics of GenAI: The Cost Equation Changes

DeepSeek accelerates the trend toward cheaper, more efficient inference and post-training, significantly altering the economics of GenAI deployment. Rishi points to the Jevons Paradox: “As LLMs become cheaper and more efficient, enterprises won’t just replace proprietary APIs with open-source models; they’ll use AI even more, fine-tuning and deploying multiple domain-specific models instead of relying on a single general-purpose one.”

Srivastava emphasizes the financial impact, stating, “A reasoning model like R1 will be as much as 7x cheaper than using an OpenAI or Anthropic [model]. Having a model with those economics unlocks many use cases that previously may not have been financially viable or attractive for most enterprises, which are yet to come meaningfully online with Gen AI.”

Ratner adds, “As the generators become more powerful and commoditized, it’s all about labeling! Whether it’s RLHF, heuristic methods like DeepSeek-R1’s reward functions, or advanced hybrid approaches, defining the acceptance function to match the distribution we want is the logical next step.”

DeepSeek is undeniably a milestone in the AI industry, marking the first time an open-source model has reached true competitive parity with proprietary alternatives. Rishi and Srivastava see it as a fundamental shift, ushering in a new era of AI development where companies can fully control high-performing models while benefiting from the economic advantages of open source. Goyal, however, remains skeptical of its real-world adoption so far, viewing it more as a pricing lever against incumbents than an immediate replacement. Ratner reinforces that while DeepSeek represents meaningful progress, much of the future of AI hinges on high-quality, domain-specific data and labeling. He concludes, “Whether you see it as a paradigm shift or just another step in the AI arms race, its impact on the industry is undeniable.”

Predibase Frequently Asked Questions (FAQ)

When was Predibase founded?

Predibase was founded in 2021.

Where is Predibase's headquarters?

Predibase's headquarters is located in San Francisco, California.

What is Predibase's latest funding round?

Predibase's latest funding round is Series A - II.

How much did Predibase raise?

Predibase raised a total of $28.45M.

Who are the investors of Predibase?

Investors of Predibase include Felicis, Greylock Partners, Zoubin Ghahramani, Ben Hamner, Remi El-Ouazzane and 7 more.

Who are Predibase's competitors?

Competitors of Predibase include Baseten and 8 more.

What products does Predibase offer?

Predibase's products include Predibase Platform.


Compare Predibase to Competitors

Chalk is a data platform that focuses on machine learning in the technology industry. The company offers services such as real-time data computation, feature storage, monitoring, and predictive maintenance, all aimed at enhancing machine learning processes. Chalk primarily serves sectors such as the credit industry, fraud and risk management, and predictive maintenance. It was founded in 2022 and is based in San Francisco, California.

Clarifai is an artificial intelligence (AI) company. The company develops technology for operating AI at scale, offering a platform for natural language processing, automatic speech recognition, and computer vision. It helps enterprises and public sector organizations transform video, images, text, and audio data into structured data. It serves the electronic commerce, manufacturing, media and entertainment, retail, and transportation industries. The company was founded in 2013 and is based in Wilmington, Delaware.

2021.AI focuses on accelerating artificial intelligence (AI) implementations in various industries. The company offers a full-service solution for AI opportunities, from data capture to model deployment, with a strong emphasis on governance, risk, & compliance (GRC) for AI and related data. It primarily serves sectors such as finance and banking, software and tech, manufacturing, transportation, the public sector, and life science. It was founded in 2016 and is based in Copenhagen, Denmark.

Vianai Systems focuses on artificial intelligence (AI) in the software industry. The company offers AI-based solutions that enable businesses to uncover insights in their enterprise systems using natural language and to build machine learning applications. Vianai Systems primarily serves the software industry. It was founded in 2019 and is based in Palo Alto, California.

InData Labs operates as a data science firm and provides artificial intelligence (AI) powered solutions. The company offers consulting and software development services such as predictive analytics, natural language processing, and computer vision. It primarily serves sectors such as electronic commerce, marketing and advertising, logistics and supply chain, gaming and entertainment, digital health, and fintech. The company was founded in 2014 and is based in Miami, Florida.

Explosion is a software company that provides developer tools and solutions for artificial intelligence and natural language processing (NLP). The company offers products including spaCy, an open-source library for NLP tasks, and Prodigy, an annotation tool for creating training data for machine learning models. Explosion's solutions serve sectors such as biomedical, finance, media, legal, and humanities. It was founded in 2016 and is based in Berlin, Germany.
