Microsoft Azure


ESPs containing Microsoft Azure

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The AI development platforms market offers solutions that serve as one-stop shops for enterprises that want to develop and launch in-house AI projects. Vendors in this space enable organizations to manage all aspects of the AI lifecycle — from data preparation, training, and validation to model deployment and continuous monitoring — through a single platform in order to facilitate end-to-end model…

Microsoft Azure is named a Highflier among 15 other companies, including Google Cloud Platform, Databricks, and IBM.


Research containing Microsoft Azure

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Microsoft Azure in 6 CB Insights research briefs, most recently on Jan 8, 2025.

Sep 25, 2024

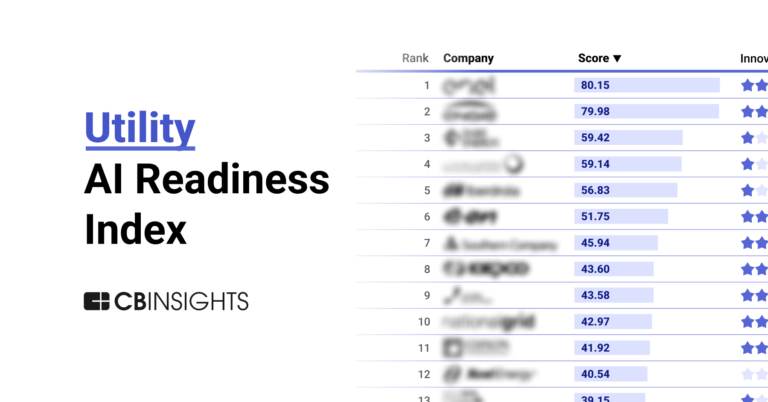

The top 25 utility companies by AI readiness

Sep 13, 2024

The AI computing hardware market map

Expert Collections containing Microsoft Azure

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Microsoft Azure is included in 3 Expert Collections, including Smart Cities.

Smart Cities

2,455 items

Smart building tech covers energy management/HVAC tech, occupancy/security tech, connectivity/IoT tech, construction materials, robotics use in buildings, and the metaverse/virtual buildings.

Cybersecurity

10,544 items

These companies protect organizations from digital threats.

Future of the Factory (2024)

436 items

This collection contains companies in the key markets highlighted in the Future of the Factory 2024 report. Companies are not exclusive to the categories listed.

Latest Microsoft Azure News

Mar 3, 2025

DevOps.com

Managing AI APIs: Best Practices for Secure and Scalable AI API Consumption

March 3, 2025

Businesses across industries are increasingly integrating AI to streamline operations, gain competitive advantages and unlock new revenue opportunities. A key enabler of this AI adoption is the ability to consume AI models via APIs and, in many cases, expose AI-powered services as APIs. Extending API-driven architectures to AI enables efficient, scalable and well-governed access to AI capabilities.

However, managing AI APIs presents unique challenges compared to traditional APIs. Unlike conventional APIs that primarily facilitate structured data exchange, AI APIs often require high computational resources, dynamic access control and contextual input filtering. Moreover, large language models (LLMs) introduce additional considerations such as prompt engineering, response validation and ethical constraints that demand a specialized API management strategy.

To effectively manage AI APIs, organizations need specialized API management strategies that can address unique challenges such as model-specific rate limiting, dynamic request transformations, prompt handling, content moderation and seamless multi-model routing, ensuring secure, efficient and scalable AI consumption.

GenAI Usage and API Integration

Organizations typically deploy and consume generative AI (GenAI) services either through cloud-hosted AI APIs or internally hosted AI models. The former includes publicly available AI services from providers such as OpenAI, AWS Bedrock, Google Vertex AI and Microsoft Azure OpenAI Service, which offer pre-trained and fine-tunable models that enterprises can integrate via APIs.

Alternatively, some organizations choose to host AI models on-premises or in private cloud environments due to concerns around data privacy, latency, cost optimization and compliance. This approach often involves open-source models such as Llama, Falcon and Mistral, as well as fine-tuned variants tailored to specific business needs.

Regardless of whether an organization is consuming AI externally or hosting its own models, the following considerations are critical:

- Security: Prevent unauthorized access and ensure that AI services operate within compliance frameworks.
- Rate Limiting: Manage API consumption efficiently to control costs and prevent excessive usage.
- Context Filtering and Content Governance: Ensure that AI responses align with ethical standards, brand policies and regulatory requirements.

To enforce these controls, external, cloud and internal AI services should be consumed via a gateway, ensuring structured governance, security enforcement and seamless integration across environments. However, in some cases, internal AI APIs may not need to go through the gateway, depending on security policies and deployment architectures.

Understanding Ingress and Egress Gateways for AI APIs

There are two main types of gateways, namely ingress and egress. For those familiar with Kubernetes, these concepts align with Kubernetes traffic management. Here is how they apply to AI APIs.

- Ingress API Gateway: It controls how external consumers (partners, customers or developers) access an organization’s AI APIs. It enforces security policies, authentication, authorization, rate limiting and monetization, ensuring controlled API exposure.
- Egress API Gateway: It controls how internal applications consume external or cloud-based AI services. It enforces governance, security policies, analytics and cost control mechanisms to optimize AI API consumption.

Best Practices for AI API Management

Whether exposing internal AI services (ingress) or consuming external AI services (egress), organizations must implement best practices to ensure structured, secure and cost-effective API usage.
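The ingress and egress roles described above can be illustrated with a minimal Python sketch. It is not taken from the article or from any gateway product: the `Gateway` class, the policy functions, the API key and the provider hostname are all hypothetical names chosen for illustration. The idea shown is only that both gateway types run a request through a chain of policy checks, and differ in which checks apply.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A policy inspects a request and returns an error message, or None if it passes.
Policy = Callable[[dict], Optional[str]]

# Hypothetical values, for illustration only.
VALID_API_KEYS = {"partner-key-123"}
ALLOWED_PROVIDERS = {"ai-provider.example.com"}


def require_api_key(request: dict) -> Optional[str]:
    """Ingress check: external callers must present a known API key."""
    if request.get("api_key") not in VALID_API_KEYS:
        return "unauthorized caller"
    return None


def require_allowed_provider(request: dict) -> Optional[str]:
    """Egress check: internal apps may only call approved AI providers."""
    if request.get("provider_host") not in ALLOWED_PROVIDERS:
        return "provider not approved"
    return None


@dataclass
class Gateway:
    direction: str  # "ingress" or "egress"
    policies: list[Policy] = field(default_factory=list)

    def handle(self, request: dict) -> dict:
        # Run every policy in order and stop at the first failure.
        for policy in self.policies:
            error = policy(request)
            if error is not None:
                return {"allowed": False, "reason": error}
        return {"allowed": True, "reason": None}


# Ingress guards the organization's own AI APIs from outside callers.
ingress = Gateway("ingress", [require_api_key])
# Egress governs calls from internal applications to external AI services.
egress = Gateway("egress", [require_allowed_provider])
```

In practice a real gateway would also apply rate limiting, analytics and content checks in the same chain; those are omitted here to keep the sketch short.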
Shared Best Practices for Ingress and Egress AI API Management

- Enforce Secure Access and Authentication: Use OAuth, API keys, JWT or role-based access control (RBAC) to regulate API access and restrict sensitive AI functionalities.
- Apply AI Guardrails: Implement content moderation, bias detection, response validation and ethical safeguards to prevent AI misuse.
- Monitor and Analyze API Traffic: Track usage patterns, response times and failure rates to maintain service reliability and detect anomalies.
- Ensure Privacy and Compliance: Apply encryption, data anonymization and compliance frameworks (GDPR, HIPAA, AI ethics) to meet regulatory requirements.
- Implement Token-Based Rate Limiting: Regulate API usage to prevent excessive costs, ensure fair resource allocation and mitigate abuse.

Best Practices for Ingress AI API Management

When exposing internal AI-powered services to external users, ingress AI API management ensures structured, secure and controlled access. Without proper controls, AI APIs face risks such as unauthorized access, data leakage, scalability challenges and inconsistent governance. The following are some best practices for exposing AI APIs via ingress gateways:

- Enable a Self-Service Developer Portal: Provide documentation, governance controls and subscription mechanisms for third-party developers.
- Monitor API Consumption and Performance: Ensure optimal service reliability by tracking request patterns and detecting anomalies.

Best Practices for Egress AI API Management

As organizations integrate multiple external AI providers, egress AI API management ensures structured, secure and optimized consumption of third-party AI services. This includes governing AI usage, enhancing security, optimizing cost and standardizing AI interactions across multiple providers. Below are some best practices for consuming AI APIs via egress gateways:

- Optimize Model Selection: Dynamically route requests to AI models based on cost, latency or regulatory constraints.
- Leverage Semantic Caching: Reduce redundant API calls by caching AI responses for similar queries.
- Enrich AI Requests with Context: Inject metadata for traceability, personalization and enhanced response accuracy.

Beyond Gateways

Comprehensive AI API management provides broader governance, encompassing lifecycle management, monitoring and policy enforcement. Key features of AI API management include API marketplaces for discovery and monetization, developer tools such as API testing sandboxes and SDK generators, and observability features for tracking usage and debugging. Moreover, it supports model routing to optimize cost and performance, prompt management to standardize AI interactions and compliance tools for enforcing governance policies.

Organizations should be able to efficiently manage internal and external AI services across hybrid and multi-cloud environments, ensuring flexibility and scalability. AI API management must align with cloud-native principles to support elastic scaling, security, observability and cost efficiency. Kubernetes enables dynamic scaling, while monitoring tools such as Datadog and OpenTelemetry enhance visibility. Additionally, serverless AI inference helps optimize costs. Leveraging Kubernetes-native API gateways helps organizations build a scalable, secure and cost-effective AI API ecosystem.

Closing Thoughts

Managing AI APIs effectively is the key to unlocking real business value without spiraling costs or security risks. Egress API management keeps AI consumption smart and efficient, while ingress API management ensures secure and controlled access. With a cloud-native approach, organizations can scale AI API management seamlessly.

By adopting a structured AI API management strategy, organizations can harness AI’s full potential while maintaining security, compliance and efficiency. Whether deploying AI APIs internally or consuming them externally, a well-governed API ecosystem ensures sustainable AI adoption.
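The token-based rate limiting practice mentioned in the article can be sketched in a few lines of Python. This is one possible interpretation, not the article's implementation: it assumes a fixed time window and a per-consumer budget counted in LLM tokens rather than in requests. The class name `TokenBudgetLimiter`, its parameters and the injectable `clock` are hypothetical choices made for illustration.

```python
import time
from typing import Callable


class TokenBudgetLimiter:
    """Fixed-window limiter that counts LLM tokens rather than requests."""

    def __init__(
        self,
        tokens_per_window: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.tokens_per_window = tokens_per_window
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        # consumer id -> (window start time, tokens used in that window)
        self._usage: dict[str, tuple[float, int]] = {}

    def allow(self, consumer: str, tokens: int) -> bool:
        """Return True and record usage if the request fits the consumer's budget."""
        now = self.clock()
        start, used = self._usage.get(consumer, (now, 0))
        if now - start >= self.window_seconds:
            start, used = now, 0  # window expired: start a fresh one
        if used + tokens > self.tokens_per_window:
            self._usage[consumer] = (start, used)
            return False  # over budget: reject without consuming tokens
        self._usage[consumer] = (start, used + tokens)
        return True
```

A gateway could call `allow(consumer_id, estimated_tokens)` before forwarding a prompt, using whatever token estimate it has available. A sliding window or token-bucket variant would smooth out bursts at window boundaries; the fixed window is used here only because it is the simplest to follow.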

Microsoft Azure Frequently Asked Questions (FAQ)

When was Microsoft Azure founded?

Microsoft, the parent company of Microsoft Azure, was founded in 1975. Azure itself became generally available in 2010.

Where is Microsoft Azure's headquarters?

Microsoft Azure's headquarters is located in Redmond, Washington.

Who are Microsoft Azure's competitors?

Competitors of Microsoft Azure include Databricks, RNV Analytics, VULCAiN Ai, Skytap, Autogon AI and 7 more.


Compare Microsoft Azure to Competitors

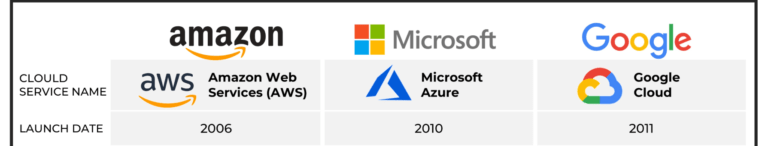

Amazon Web Services specializes in cloud computing services, offering scalable and secure IT infrastructure solutions across various industries. The company provides a range of services including compute power, database storage, content delivery, and other functionalities to support the development of sophisticated applications. AWS caters to a diverse clientele, including sectors such as financial services, healthcare, telecommunications, and gaming, by providing industry-specific solutions and technologies like analytics, artificial intelligence, and serverless computing. It was founded in 2006 and is based in Seattle, Washington. Amazon Web Services operates as a subsidiary of Amazon.

Google Cloud Platform specializes in providing cloud computing services. The company offers a suite of cloud solutions including artificial intelligence (AI) and machine learning, data analytics, computing, storage, and networking capabilities designed to help businesses scale and innovate. Google Cloud Platform caters to various sectors such as retail, financial services, healthcare, media and entertainment, telecommunications, gaming, manufacturing, supply chain and logistics, government, and education. It was founded in 2008 and is based in Mountain View, California.

Cloudera operates in the hybrid data management and analytics sector. Its offerings include a hybrid data platform that is intended to manage data in various environments, featuring secure data management and cloud-native data services. Cloudera's tools are used in sectors such as financial services, healthcare, and manufacturing, focusing on areas like data engineering, stream processing, data warehousing, operational databases, machine learning, and data visualization. It was founded in 2008 and is based in Santa Clara, California.

Alibaba Cloud specializes in cloud computing and data management services within the technology sector. The company offers scalable, secure, and reliable cloud services, including elastic computing, data storage, and database management solutions. Alibaba Cloud caters to a diverse range of customers, including businesses of various sizes, financial institutions, and government entities. It was founded in 2009 and is based in Hangzhou, Zhejiang. Alibaba Cloud operates as a subsidiary of Alibaba.com.

Databricks is a data and AI company that specializes in unifying data, analytics, and artificial intelligence across various industries. The company offers a platform that facilitates data management, governance, real-time analytics, and the building and deployment of machine learning and AI applications. Databricks serves sectors such as financial services, healthcare, public sector, retail, and manufacturing, among others. It was founded in 2013 and is based in San Francisco, California.

DataRobot specializes in artificial intelligence and offers an open, end-to-end AI lifecycle platform within the technology sector. The company provides solutions for scaling AI applications, monitoring and governing AI models, and driving business value through predictive and generative AI. DataRobot serves various industries, including healthcare, manufacturing, retail, and financial services, with its AI platform. It was founded in 2012 and is based in Boston, Massachusetts.
